Schrödinger equation

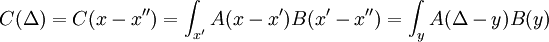

2008/9 Schools Wikipedia Selection. Related subjects: Physics

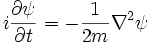

In physics, especially quantum mechanics, the Schrödinger equation is an equation that describes how the quantum state of a physical system changes in time. It is as central to quantum mechanics as Newton's laws are to classical mechanics.

In the standard interpretation of quantum mechanics, the quantum state, also called a wavefunction or state vector, is the most complete description that can be given to a physical system. Solutions to Schrödinger's equation describe atomic and subatomic systems, electrons and atoms, but also macroscopic systems, possibly even the whole universe. The equation is named after Erwin Schrödinger, who discovered it in 1926.

Schrödinger's equation can be mathematically transformed into Heisenberg's matrix mechanics, and into Feynman's path integral formulation. The Schrödinger equation describes time in a way that is inconvenient for relativistic theories, a problem which is less severe in Heisenberg's formulation and completely absent in the path integral.

The Schrödinger equation

There are several different Schrödinger equations.

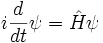

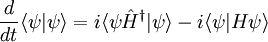

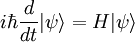

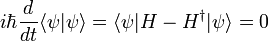

General quantum system

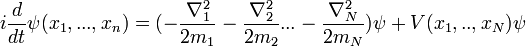

For a general quantum system:

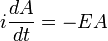

where

- ψ is the wavefunction, which is the probability amplitude for different configurations.

- ħ is Planck's constant over 2π, and it can be set to a value of 1 when using natural units.

- H is the Hamiltonian operator.

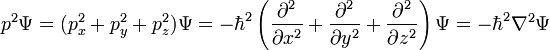

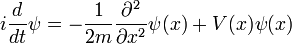

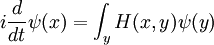

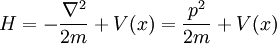

Single particle in three dimensions

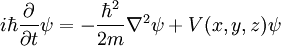

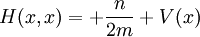

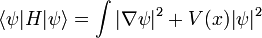

For a single particle in three dimensions:

where

- ψ is the wavefunction, which is the amplitude for the particle to have a given position at any given time.

- m is the mass of the particle.

- V(x,y,z) is the potential energy the particle has at each position.
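For reference, the two forms described above are conventionally written as follows. This is a standard-notation sketch rather than a copy of the original figures; the Laplacian symbol ∇² is introduced here, and ħ, H, m and V are as defined in the lists above.

```latex
% General quantum system
i\hbar\,\frac{\partial}{\partial t}\Psi = \hat{H}\,\Psi

% Single particle in three dimensions
i\hbar\,\frac{\partial}{\partial t}\psi(x,y,z,t)
  = -\frac{\hbar^2}{2m}\,\nabla^2\psi(x,y,z,t) + V(x,y,z)\,\psi(x,y,z,t)
```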

Historical background and development

Einstein interpreted Planck's quanta as photons, particles of light, and proposed that the energy of a photon is proportional to its frequency, a mysterious wave-particle duality. Since energy and momentum are related in the same way as frequency and wavenumber in relativity, it followed that the momentum of a photon is proportional to its wavenumber.

De Broglie hypothesized that this is true for all particles, for electrons as well as photons: the energy and momentum of an electron are the frequency and wavenumber of a wave. Assuming that the waves travel roughly along classical paths, he showed that they form standing waves only for certain discrete frequencies, discrete energy levels which reproduced the old quantum condition.

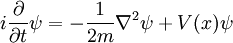

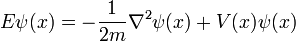

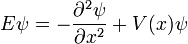

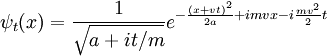

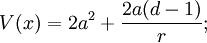

Following up on these ideas, Schrödinger decided to find a proper wave equation for the electron. He was guided by Hamilton's analogy between mechanics and optics, encoded in the observation that the zero-wavelength limit of optics resembles a mechanical system: the trajectories of light rays become sharp tracks which obey a principle of least action. Hamilton believed that mechanics was the zero-wavelength limit of wave propagation, but did not formulate an equation for those waves. This is what Schrödinger did, and a modern version of his reasoning is reproduced in the next section. The equation he found is (in natural units):

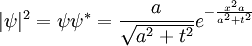

Using this equation, Schrödinger computed the spectral lines for hydrogen by treating a hydrogen atom's single negatively charged electron as a wave, ψ, moving in a potential well, V, created by the positively charged proton. This computation reproduced the energy levels of the Bohr model.

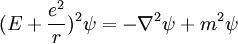

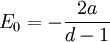

But this was not enough, since Sommerfeld had already seemingly correctly reproduced relativistic corrections. Schrödinger used the relativistic energy momentum relation to find what is now known as the Klein-Gordon equation in a Coulomb potential:

He found the standing-waves of this relativistic equation, but the relativistic corrections disagreed with Sommerfeld's formula. Discouraged, he put away his calculations and secluded himself in an isolated mountain cabin with a lover.

While there, Schrödinger decided that the earlier nonrelativistic calculations were novel enough to publish, and decided to leave off the problem of relativistic corrections for the future. He put together his wave equation and the spectral analysis of hydrogen in a paper in 1926. The paper was enthusiastically endorsed by Einstein, who saw the matter-waves as the visualizable antidote to what he considered to be the overly formal matrix mechanics.

The Schrödinger equation tells you the behaviour of ψ, but does not say what ψ is. Schrödinger tried unsuccessfully, in his fourth paper, to interpret it as a charge density. In 1926 Max Born, just a few days after Schrödinger's fourth and final paper was published, successfully interpreted ψ as a probability amplitude. Schrödinger, though, always opposed a statistical or probabilistic approach, with its associated discontinuities; like Einstein, who believed that quantum mechanics was a statistical approximation to an underlying deterministic theory, Schrödinger was never reconciled to the Copenhagen interpretation.

Derivation

Short Heuristic Derivation

Assumptions

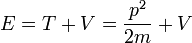

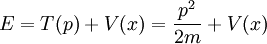

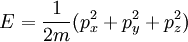

- (1) The total energy E of a particle is E = p²/2m + V.

- This is the classical expression for a particle with mass m where the total energy E is the sum of the kinetic energy, p²/2m, and the potential energy V. The momentum of the particle is p, or mass times velocity. The potential energy is assumed to vary with position, and possibly time as well.

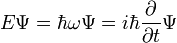

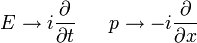

- Note that the energy E and momentum p appear in the following two relations:

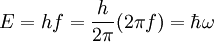

- (2) Einstein's light quanta hypothesis of 1905, which asserts that the energy E of a photon is proportional to the frequency f of the corresponding electromagnetic wave: E = hf = ħω,

- where the frequency f and the energy E of the quanta of radiation (photons) are related by Planck's constant h, and ω = 2πf is the angular frequency of the wave.

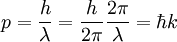

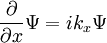

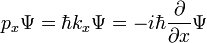

- (3) The de Broglie hypothesis of 1924, which states that any particle can be associated with a wave, represented mathematically by a wavefunction Ψ, and that the momentum p of the particle is related to the wavelength λ of the associated wave by: p = h/λ = ħk,

- where λ is the wavelength and k = 2π/λ is the wavenumber of the wave.

Expressing p and k as vectors, we have p = ħk.

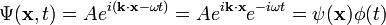

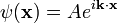

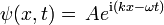

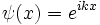

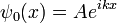

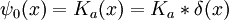

Expressing the wave function as a complex plane wave

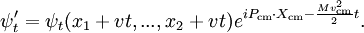

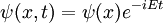

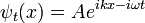

Schrödinger's great insight, late in 1925, was to express the phase of a plane wave as a complex phase factor:

- where

- and

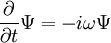

and to realize that since

then

and similarly since:

then

and hence:

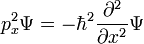

so that, again for a plane wave, he obtained:

And by inserting these expressions for the energy and momentum into the classical formula we started with we get Schrödinger's famed equation for a single particle in the 3-dimensional case in the presence of a potential V:
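The steps above can be written out explicitly. The following is a reconstruction under assumptions (1) to (3), not a copy of the original figures; the constant amplitude A is introduced here for illustration.

```latex
\begin{aligned}
\Psi(\mathbf{x},t) &= A\,e^{i(\mathbf{k}\cdot\mathbf{x}-\omega t)}, \\
\frac{\partial\Psi}{\partial t} &= -i\omega\,\Psi
  \;\Longrightarrow\; E\,\Psi = \hbar\omega\,\Psi = i\hbar\,\frac{\partial\Psi}{\partial t}, \\
\frac{\partial^2\Psi}{\partial x^2} &= -k_x^2\,\Psi
  \;\Longrightarrow\; p_x^2\,\Psi = \hbar^2 k_x^2\,\Psi = -\hbar^2\,\frac{\partial^2\Psi}{\partial x^2}, \\
p^2\,\Psi &= \left(p_x^2+p_y^2+p_z^2\right)\Psi = -\hbar^2\,\nabla^2\Psi, \\
E = \frac{p^2}{2m} + V
  &\;\Longrightarrow\; i\hbar\,\frac{\partial\Psi}{\partial t}
   = -\frac{\hbar^2}{2m}\,\nabla^2\Psi + V\,\Psi .
\end{aligned}
```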

Longer Discussion

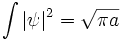

The particle is described by a wave, and in natural units, the frequency is the energy E of the particle, while the momentum p is the wavenumber k. These are not two separate assumptions, because of special relativity.

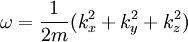

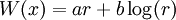

The total energy is the same function of momentum and position as in classical mechanics:

where the first term T(p) is the kinetic energy and the second term V(x) is the potential energy.

Schrödinger required that a wave packet at position x with wavenumber k will move along the trajectory determined by Newton's laws in the limit that the wavelength is small.

Consider first the case without a potential, V=0.

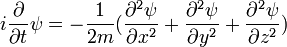

So that a plane wave with the right energy/frequency relationship obeys the free Schrödinger equation:

and by adding together plane waves, you can make an arbitrary wave.

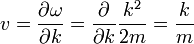

When there is no potential, a wavepacket should travel in a straight line at the classical velocity. The velocity v of a wavepacket is:

which is the momentum over the mass as it should be. This is one of Hamilton's equations from mechanics:

after identifying the energy and momentum of a wavepacket as the frequency and wavenumber.
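As a short worked version of this step, in natural units (ħ = 1) and assuming the free dispersion relation ω = k²/2m implied by the kinetic energy above, the group velocity of a wavepacket is:

```latex
\omega(k) = \frac{k^2}{2m},
\qquad
v = \frac{\partial\omega}{\partial k} = \frac{k}{m} = \frac{p}{m},
\qquad\text{which matches Hamilton's equation}\quad
\frac{dx}{dt} = \frac{\partial H}{\partial p}.
```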

To include a potential energy, consider that as a particle moves the energy is conserved, so that for a wavepacket with approximate wavenumber k at approximate position x the quantity

must be constant. The frequency doesn't change as a wave moves, but the wavenumber does. So where there is a potential energy, it must add in the same way:

This is the time dependent Schrödinger equation. It is the equation for the energy in classical mechanics, turned into a differential equation by substituting:

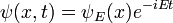

Schrödinger studied the standing wave solutions, since these were the energy levels. Standing waves have a complicated dependence on space, but vary in time in a simple way:

substituting, the time-dependent equation becomes the standing wave equation:

which is the original time-independent Schrödinger equation.

In a potential gradient, the k-vector of a short-wavelength wave must vary from point to point, to keep the total energy constant. Sheets perpendicular to the k-vector are the wavefronts, and they gradually change direction, because the wavelength is not everywhere the same. A wavepacket follows the shifting wavefronts with the classical velocity, with the acceleration equal to the force divided by the mass.

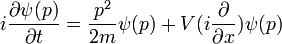

An easy modern way to verify that Newton's second law holds for wavepackets is to take the Fourier transform of the time dependent Schrödinger equation. For an arbitrary polynomial potential this is called the Schrödinger equation in the momentum representation:

The group-velocity relation for the Fourier transformed wave-packet gives the second of Hamilton's equations.

Versions

There are several equations which go by Schrödinger's name:

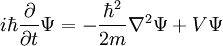

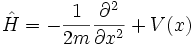

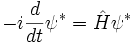

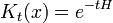

Time Dependent Equation

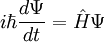

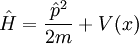

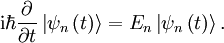

This is the equation of motion for the quantum state. In the most general form, it is written:

where H is a linear operator acting on the wavefunction ψ. H takes as input one ψ and produces another in a linear way, a function-space version of a matrix multiplying a vector. For the specific case of a single particle in one dimension moving under the influence of a potential V:

and the operator H can be read off:

it is a combination of the operator which takes the second derivative, and the operator which pointwise multiplies ψ by V(x). When acting on ψ it reproduces the right hand side.

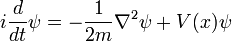

For a particle in three dimensions, the only difference is more derivatives:

and for N particles, the difference is that the wavefunction is in 3N-dimensional configuration space, the space of all possible particle positions.

This last equation is in a very high dimension, so that the solutions are not easy to visualize.

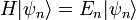

Time Independent Equation

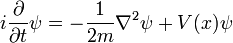

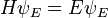

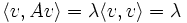

This is the equation for the standing waves, the eigenvalue equation for H. In abstract form, for a general quantum system, it is written:

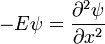

For a particle in one dimension, with the mass absorbed into rescaling either time or space:

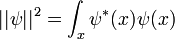

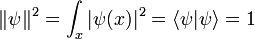

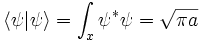

But there is a further restriction: the solution must not grow at infinity, so that it has a finite norm:

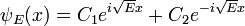

For example, when there is no potential, the equation reads:

which has oscillatory solutions for E>0 (the C's are arbitrary constants):

and exponential solutions for E<0

The exponentially growing solutions have an infinite norm, and are not physical. They are not allowed in a finite volume with periodic or fixed boundary conditions.

For a constant potential V the solution is oscillatory for E>V and exponential for E<V, corresponding to energies which are allowed or disallowed in classical mechanics. Oscillatory solutions have a classically allowed energy and correspond to actual classical motions, while the exponential solutions have a disallowed energy and describe a small amount of quantum bleeding into the classically disallowed region, known as quantum tunneling. If the potential V grows at infinity, the motion is classically confined to a finite region, which means that in quantum mechanics every solution becomes an exponential far enough away. The condition that the exponential is decreasing restricts the energy levels to a discrete set, called the allowed energies.
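The discreteness of the allowed energies in a confining potential can be illustrated numerically. The sketch below is not part of the original article: it assumes units with ħ = m = 1, picks the harmonic potential V(x) = x²/2 as an example, and replaces the second derivative by central differences on a finite grid. The function name `lowest_energies` and its parameters are hypothetical.

```python
import numpy as np

def lowest_energies(potential, x_max=10.0, n_points=1000, n_levels=4):
    """Lowest eigenvalues of -(1/2) psi'' + V(x) psi = E psi (hbar = m = 1)."""
    x = np.linspace(-x_max, x_max, n_points)
    dx = x[1] - x[0]
    # Central-difference second derivative. psi is taken to vanish outside the
    # grid, which selects the decaying exponential at large |x|.
    main = 1.0 / dx**2 + potential(x)
    off = -0.5 / dx**2 * np.ones(n_points - 1)
    hamiltonian = np.diag(main) + np.diag(off, 1) + np.diag(off, -1)
    energies, states = np.linalg.eigh(hamiltonian)
    return x, energies[:n_levels], states[:, :n_levels]

x, energies, states = lowest_energies(lambda x: 0.5 * x**2)
print(energies)  # close to 0.5, 1.5, 2.5, 3.5 for this potential
```

Only a discrete set of energies survives because the boundary condition rejects the exponentially growing solutions. For this example potential the values should approach n + 1/2 as the grid is refined.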

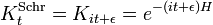

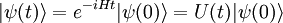

Energy Eigenstates

A solution ψE(x) of the time independent equation is called an energy eigenstate with energy E:

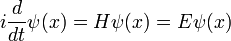

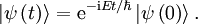

To find the time dependence of the state, consider starting the time-dependent equation with an initial condition ψE(x). The time derivative at t=0 is everywhere proportional to the value:

So that at first the whole function just gets rescaled, and it maintains the property that its time derivative is proportional to itself. So for all times,

substituting,

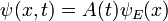

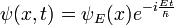

So that the solution of the time-dependent equation with this initial condition is:

or, with explicit factors of ħ:

This is a restatement of the fact that solutions of the time-independent equation are the standing wave solutions of the time dependent equation. They only get multiplied by a phase as time goes by, and otherwise are unchanged.
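A small finite-dimensional sketch makes both statements concrete. It assumes ħ = 1 and uses an arbitrary 3-state Hermitian matrix as a stand-in Hamiltonian; the matrix entries and the helper `evolve` are illustrative, not taken from the article.

```python
import numpy as np

# Hypothetical 3-state Hamiltonian (a Hermitian matrix), with hbar = 1.
H = np.array([[1.0, 0.5, 0.0],
              [0.5, 2.0, 0.5],
              [0.0, 0.5, 3.0]])
energies, states = np.linalg.eigh(H)

def evolve(psi0, t):
    """Solve i dpsi/dt = H psi by expanding psi0 in energy eigenstates."""
    coefficients = states.conj().T @ psi0
    return states @ (np.exp(-1j * energies * t) * coefficients)

t = 0.7
psi_E = states[:, 0]                               # eigenstate with energy energies[0]
evolved = evolve(psi_E, t)
phase_only = np.exp(-1j * energies[0] * t) * psi_E  # same state times a pure phase

# A superposition of two levels changes its probabilities through the relative phase.
superposition = (states[:, 0] + states[:, 1]) / np.sqrt(2)
density_change = np.abs(evolve(superposition, t))**2 - np.abs(superposition)**2
```

Here `evolved` and `phase_only` agree, while `density_change` is nonzero because the two components rotate at different frequencies.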

Superpositions of energy eigenstates change their properties according to the relative phases between the energy levels.

Properties

First Order in Time

The Schrödinger equation describes the time evolution of a quantum state, and must determine the future value from the present value. A classical field equation can be second order in time derivatives, because the classical state can include the time derivative of the field. But a quantum state is a full description of a system, so the Schrödinger equation is always first order in time.

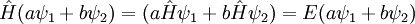

Linear

The Schrödinger equation is linear in the wavefunction: if ψ(x,t) and φ(x,t) are solutions to the time dependent equation, then so is aψ + bφ, where a and b are any complex numbers.

In quantum mechanics, the time evolution of a quantum state is always linear, for fundamental reasons. Although there are nonlinear versions of the Schrödinger equation, these are not equations which describe the evolution of a quantum state, but classical field equations like Maxwell's equations or the Klein-Gordon equation.

The Schrödinger equation itself can be thought of as the equation of motion for a classical field, not for a wavefunction. Taking this point of view, it describes a coherent wave of nonrelativistic matter, a wave of a Bose condensate or a superfluid with a large indefinite number of particles and a definite phase and amplitude.

Real Eigenstates

The time-independent equation is also linear, but in this case linearity has a slightly different meaning. If two wavefunctions ψ1 and ψ2 are solutions to the time-independent equation with the same energy E, then any linear combination of the two is a solution with energy E. Two different solutions with the same energy are called degenerate.

In an arbitrary potential, there is one obvious degeneracy: if a wavefunction ψ solves the time-independent equation, so does ψ * . By taking linear combinations, the real and imaginary part of ψ are each solutions. So that restricting attention to real valued wavefunctions does not affect the time-independent eigenvalue problem.

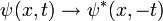

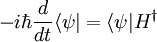

In the time-dependent equation, complex conjugate waves move in opposite directions. Given a solution to the time dependent equation ψ(x,t), the replacement:

produces another solution, and is the extension of the complex conjugation symmetry to the time-dependent case. The symmetry of complex conjugation is called time-reversal.

Unitary Time Evolution

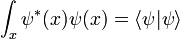

The Schrödinger equation is unitary, which means that the total norm of the wavefunction, the sum of the squared magnitudes of its values at all points:

has zero time derivative.

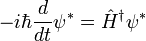

The derivative of ψ * is given by the complex conjugate equation:

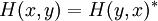

where the operator H† is defined as the continuous analog of the Hermitian conjugate,

For a discrete basis, this just means that the matrix elements of the linear operator H obey:

The derivative of the inner product is:

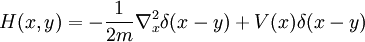

and is proportional to the imaginary part of H. If H has no imaginary part, that is, if it is self-adjoint, then the probability is conserved. This is true not just for the Schrödinger equation as written, but for the Schrödinger equation with nonlocal hopping:

so long as:

the particular choice:

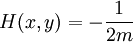

reproduces the local hopping in the ordinary Schrödinger equation. On a discrete lattice approximation to a continuous space, H(x,y) has a simple form:

whenever x and y are nearest neighbors. On the diagonal

where n is the number of nearest neighbors.
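The lattice statement can be checked directly. The sketch below assumes a one-dimensional periodic lattice, so each site has n = 2 nearest neighbours, and assumes the convention H(x,y) = −1/2 between nearest neighbours and H(x,x) = n/2 on the diagonal, in units where ħ, m and the lattice spacing are 1. These values are an assumption, since the original formulas are not reproduced in this copy.

```python
import numpy as np

n_sites = 64
# Assumed convention: H(x, y) = -1/2 for nearest neighbours, H(x, x) = n/2,
# with n = 2 neighbours per site on a periodic one-dimensional lattice.
H = np.zeros((n_sites, n_sites))
for site in range(n_sites):
    H[site, site] = 2 / 2
    H[site, (site + 1) % n_sites] = -0.5
    H[site, (site - 1) % n_sites] = -0.5

is_self_adjoint = np.allclose(H, H.conj().T)

# Exact propagator U = exp(-i H t), built from the eigen-decomposition of H.
energies, modes = np.linalg.eigh(H)
t = 3.0
U = modes @ np.diag(np.exp(-1j * energies * t)) @ modes.conj().T

rng = np.random.default_rng(0)
psi0 = rng.normal(size=n_sites) + 1j * rng.normal(size=n_sites)
psi_t = U @ psi0
norm_before = np.vdot(psi0, psi0).real
norm_after = np.vdot(psi_t, psi_t).real
```

Because `H` equals its own Hermitian conjugate, `U` is unitary and `norm_after` matches `norm_before` to rounding error.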

Positive Energies

The solutions of the Schrödinger equation in a potential which is bounded below have a frequency which is bounded below. For any linear operator A, the eigenvector with the smallest eigenvalue minimizes the quantity:

over all ψ which are normalized:

by the variational principle.

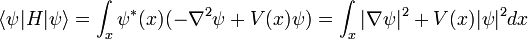

The value of the energy for the Schrödinger Hamiltonian is then the minimum value of:

after an integration by parts, and the right hand side is positive definite when V is positive.
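A numerical sketch of the variational principle, under assumptions not in the original: ħ = m = 1, the example potential V(x) = x²/2 (which is non-negative), and a finite-difference Hamiltonian on a grid. The helper `rayleigh_quotient` is a hypothetical name for the quantity being minimized.

```python
import numpy as np

def rayleigh_quotient(H, psi):
    """<psi|H|psi> / <psi|psi>, the energy expectation of a trial state."""
    return (np.vdot(psi, H @ psi) / np.vdot(psi, psi)).real

# Discretized H = -(1/2) d^2/dx^2 + V(x) with V(x) = x^2 / 2 >= 0.
x = np.linspace(-8.0, 8.0, 400)
dx = x[1] - x[0]
off = -0.5 / dx**2 * np.ones(x.size - 1)
H = np.diag(1.0 / dx**2 + 0.5 * x**2) + np.diag(off, 1) + np.diag(off, -1)

ground_energy = np.linalg.eigvalsh(H)[0]

# No trial state, random or hand-picked, can go below the lowest eigenvalue.
rng = np.random.default_rng(1)
trial_energies = [rayleigh_quotient(H, rng.normal(size=x.size)) for _ in range(20)]
gaussian_trial = rayleigh_quotient(H, np.exp(-x**2))  # deliberately too narrow
```

Every entry of `trial_energies`, and `gaussian_trial`, lies at or above `ground_energy`, which is itself positive because V is positive here.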

Positive Definite Nondegenerate Ground State

For potentials which are bounded below and are not infinite over a region, there is a ground state which minimizes the integral above. This lowest energy wavefunction is real and positive definite.

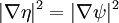

Suppose for contradiction that ψ is a lowest energy state and has a sign change, then η(x) = | ψ(x) | , the absolute value of ψ obeys

everywhere, and

except for a set of measure zero. So η is also a minimum of the integral, and it has the same value as ψ. But by smoothing out the bends at the sign change, the gradient contribution to the integral is reduced while the potential energy is hardly altered, so the energy of η can be reduced, which is a contradiction.

The lack of sign changes also proves that the ground state is nondegenerate, since if there were two ground states with energy E which were not proportional to each other, a linear combination of the two would also be a ground state with a zero.

These properties allow the analytic continuation of the Schrödinger equation to be identified as a stochastic process, which can be represented by a path integral.

Completeness

The energy eigenstates form a basis: any wavefunction may be written as a sum over the discrete energy states or an integral over continuous energy states, or more generally as an integral over a measure. This is the spectral theorem in mathematics, and in a finite state space it is just a statement of the completeness of the eigenvectors of a Hermitian matrix.

Local Conservation of Probability

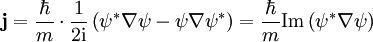

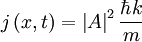

The probability density of a particle is ψ * (x)ψ(x). The probability flux is defined as:

in units of (probability)/(area × time).

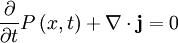

The probability flux satisfies the continuity equation:

where ρ = ψ * ψ is the probability density, measured in units of (probability)/(volume) = r⁻³. This equation is the mathematical equivalent of the probability conservation law.

For a plane wave:

So that not only is the probability of finding the particle the same everywhere, but the probability flux is as expected from an object moving at the classical velocity p / m.

The reason that the Schrödinger equation admits a probability flux is that all the hopping is local and forward in time.
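The plane-wave statement above can be checked numerically in one dimension. The sketch assumes the standard definition of the flux, j = (ħ/m) Im(ψ* ∂ψ/∂x), since the original formula is not reproduced in this copy; the values of ħ, m and p are arbitrary illustrative choices.

```python
import numpy as np

hbar, m = 1.0, 2.0           # illustrative units
p = 3.0                      # momentum of the plane wave
k = p / hbar
x = np.linspace(0.0, 10.0, 2001)
psi = np.exp(1j * k * x)     # plane wave with |psi|^2 = 1 everywhere

density = np.abs(psi)**2
dpsi_dx = np.gradient(psi, x)                         # finite-difference derivative
flux = (hbar / m) * np.imag(np.conj(psi) * dpsi_dx)   # assumed standard flux formula
```

The density is uniform, and `flux` is close to density times p/m everywhere, up to the finite-difference error.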

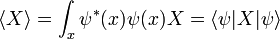

Heisenberg Observables

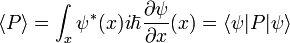

There are many linear operators which act on the wavefunction, and each one defines a Heisenberg matrix when the energy eigenstates are discrete. For a single particle, the operator which takes the derivative of the wavefunction in a certain direction:

is called the momentum operator. Multiplying operators is just like multiplying matrices: the product of A and B acting on ψ is A acting on the output of B acting on ψ.

An eigenstate of p obeys the equation:

for a number k, and for a normalizable wavefunction this restricts k to be real, and the momentum eigenstate is a wave with wavenumber k.

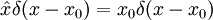

The position operator x multiplies each value of the wavefunction at the position x by x:

So that in order to be an eigenstate of x, a wavefunction must be entirely concentrated at one point:

In terms of p, the Hamiltonian is:

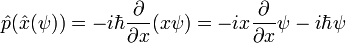

It is easy to verify that p acting on x acting on ψ:

while x acting on p acting on ψ reproduces only the first term:

so that the difference of the two is not zero:

or in terms of operators:

since the time derivative of a state is:

while the complex conjugate is

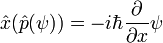

The time derivative of a matrix element

obeys the Heisenberg equation of motion. This establishes the equivalence of the Schrödinger and Heisenberg formalisms, ignoring the mathematical fine points of the limiting procedure for continuous space.
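The non-commutation of x and p described above can be verified on a grid. This sketch assumes the convention p = −i ∂/∂x with ħ = 1, so that (xp − px)ψ should equal iψ; the test function and the helper names `p_op` and `x_op` are illustrative choices.

```python
import numpy as np

x = np.linspace(-10.0, 10.0, 4001)
psi = np.exp(-x**2 / 2) * (1 + 0.3 * x)      # arbitrary smooth test function

def p_op(f):
    """Momentum operator p = -i d/dx (hbar = 1), by finite differences."""
    return -1j * np.gradient(f, x)

def x_op(f):
    """Position operator: pointwise multiplication by x."""
    return x * f

# (x p - p x) psi: the x * psi' terms cancel and one copy of i * psi survives.
commutator = x_op(p_op(psi)) - p_op(x_op(psi))
```

Away from the grid edges, `commutator` agrees with `1j * psi` up to the discretization error of the derivative.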

Correspondence principle

The Schrödinger equation satisfies the correspondence principle. In the limit of small wavelength wavepackets, it reproduces Newton's laws. This is easy to see from the equivalence to matrix mechanics.

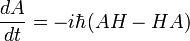

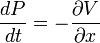

All operators in Heisenberg's formalism obey the quantum analog of Hamilton's equations:

So that in particular, the equations of motion for the X and P operators are:

In the Schrödinger picture, the interpretation of this equation is that it gives the time rate of change of the matrix element between two states when the states change with time. Taking the expectation value in any state shows that Newton's laws hold not only on average, but exactly, for the quantities:

Relativity

The Schrödinger equation does not take into account relativistic effects: as a wave equation, it is invariant under a Galilean transformation, but not under a Lorentz transformation. In order to include relativity, the physical picture must be altered in a radical way.

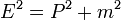

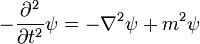

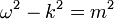

A naive generalization of the Schrödinger equation uses the relativistic mass-energy relation (in natural units):

to produce the differential equation:

which is relativistically invariant, but second order in time, and so cannot be an equation for the quantum state. This equation also has the property that there are solutions with both positive and negative frequency; a plane wave solution obeys:

which has two solutions, one with positive frequency the other with negative frequency. This is a disaster for quantum mechanics, because it means that the energy is unbounded below.

A more sophisticated attempt to solve this problem uses a first order wave equation, the Dirac equation, but again there are negative energy solutions. In order to solve this problem, it is essential to go to a multiparticle picture, and to consider the wave equations as equations of motion for a quantum field, not for a wavefunction.

The reason is that relativity is incompatible with a single particle picture. A relativistic particle cannot be localized to a small region without the particle number becoming indefinite. When a particle is localized in a box of length L, the momentum is uncertain by an amount roughly proportional to h/L by the uncertainty principle. This leads to an energy uncertainty of hc/L, when |p| is large enough so that the mass of the particle can be neglected. This uncertainty in energy is equal to the mass-energy of the particle when

and this is called the Compton wavelength. Below this length, it is impossible to localize a particle and be sure that it stays a single particle, since the energy uncertainty is large enough to produce more particles from the vacuum by the same mechanism that localizes the original particle.
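As a worked number, the length at which the energy uncertainty hc/L equals the rest energy mc² is L = h/(mc). The sketch below evaluates it for an electron; the choice of particle and the constant values (SI and CODATA 2018) are supplied here and are not part of the original text.

```python
h = 6.62607015e-34        # Planck constant, J s (exact SI value)
c = 299792458.0           # speed of light, m/s (exact SI value)
m_e = 9.1093837015e-31    # electron mass, kg (CODATA 2018)

def compton_wavelength(mass):
    """Length L at which the energy uncertainty h c / L equals m c^2."""
    return h / (mass * c)

L_electron = compton_wavelength(m_e)
energy_uncertainty = h * c / L_electron   # equals the rest energy by construction
rest_energy = m_e * c**2
print(f"{L_electron:.3e} m")              # prints 2.426e-12 m
```

Confining an electron more tightly than about 2.4 picometres therefore involves energies comparable to the energy needed to create further particles.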

But there is another approach to relativistic quantum mechanics which does allow you to follow single particle paths, and it was discovered within the path-integral formulation. If the integration paths in the path integral include paths which move both backwards and forwards in time as a function of their own proper time, it is possible to construct a purely positive frequency wavefunction for a relativistic particle. This construction is appealing, because the equation of motion for the wavefunction is exactly the relativistic wave equation, but with a nonlocal constraint that separates the positive and negative frequency solutions. The positive frequency solutions travel forward in time, the negative frequency solutions travel backwards in time. In this way, they both analytically continue to a statistical field correlation function, which is also represented by a sum over paths. But in real space, they are the probability amplitudes for a particle to travel between two points, and can be used to generate the interaction of particles in a point-splitting and joining framework. The relativistic particle point of view is due to Richard Feynman.

Feynman's method also constructs the theory of quantized fields, but from a particle point of view. In this theory, the equations of motion for the field can be interpreted as the equations of motion for a wavefunction only with caution: the wavefunction is only defined globally, and in some way related to the particle's proper time. The notion of a localized particle is also delicate: a localized particle in the relativistic particle path integral corresponds to the state produced when a local field operator acts on the vacuum, and exactly which state is produced depends on the choice of field variables.

Solutions

Some general techniques are:

- Perturbation theory

- The variational principle

- Quantum Monte Carlo methods

- Density functional theory

- The WKB approximation and semi-classical expansion

In some special cases, special methods can be used:

- List of quantum mechanical systems with analytical solutions

- Hartree-Fock method and post Hartree-Fock methods

- Discrete delta-potential method

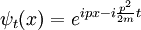

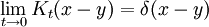

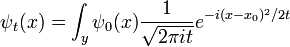

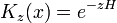

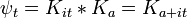

Free Schrödinger equation

When the potential is zero, the Schrödinger equation is linear with constant coefficients:

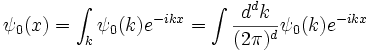

where ħ has been set to 1. The solution ψt(x) for any initial condition ψ0(x) can be found by Fourier transforms. Because the coefficients are constant, an initial plane wave:

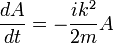

stays a plane wave. Only the coefficient changes. Substituting:

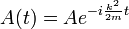

So that A is also oscillating in time:

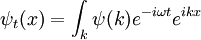

and the solution is:

where ω = k²/2m, a restatement of de Broglie's relations.

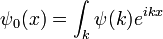

To find the general solution, write the initial condition as a sum of plane waves by taking its Fourier transform:

The equation is linear, so each plane wave evolves independently:

which is the general solution. When complemented by an effective method for taking Fourier transforms, it becomes an efficient algorithm for finding the wavefunction at any future time: Fourier transform the initial conditions, multiply by a phase, and transform back.
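The three-step recipe above can be sketched with a fast Fourier transform. This is a minimal illustration, assuming ħ = 1, a periodic grid wide enough that the packet never reaches the edges, and a Gaussian initial condition chosen for the example; the function name `evolve_free` is hypothetical.

```python
import numpy as np

def evolve_free(psi0, x, t, m=1.0):
    """Free evolution with hbar = 1: transform, multiply by a phase, transform back."""
    dx = x[1] - x[0]
    k = 2 * np.pi * np.fft.fftfreq(x.size, d=dx)   # wavenumbers of the FFT modes
    psi_k = np.fft.fft(psi0)
    psi_k *= np.exp(-1j * k**2 * t / (2 * m))      # each mode rotates at omega = k^2 / 2m
    return np.fft.ifft(psi_k)

x = np.linspace(-40.0, 40.0, 2048, endpoint=False)
psi0 = np.exp(-x**2 / 2) * np.exp(2j * x)          # Gaussian packet moving with k = 2
psi_t = evolve_free(psi0, x, t=3.0)

dx = x[1] - x[0]
norm0 = np.sum(np.abs(psi0)**2) * dx
norm_t = np.sum(np.abs(psi_t)**2) * dx
mean_x = np.sum(x * np.abs(psi_t)**2) * dx / norm_t
```

The norm is unchanged, and the packet centre `mean_x` ends up near kt/m = 6, the classical displacement.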

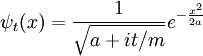

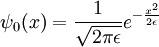

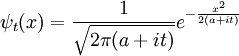

Gaussian Wavepacket

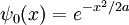

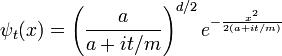

An easy and instructive example is the Gaussian wavepacket:

where a is a positive real number, the square of the width of the wavepacket. The total normalization of this wavefunction is:

The Fourier transform is a Gaussian again in terms of the wavenumber k:

With the physics convention which puts the factors of 2π in Fourier transforms in the k-measure.

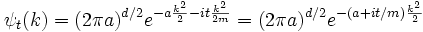

Each separate wave only phase-rotates in time, so that the time dependent Fourier-transformed solution is:

The inverse Fourier transform is still a Gaussian, but the parameter a has become complex, and there is an overall normalization factor.

The branch of the square root is determined by continuity in time: it is the value which is nearest to the positive square root of a. It is convenient to rescale time to absorb m, replacing t/m by t.

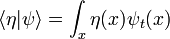

The integral of ψ over all space is invariant, because it is the inner product of ψ with the state of zero energy, which is a wave with infinite wavelength, a constant function of space. For any energy state, with wavefunction η(x), the inner product:

$$\langle \eta | \psi \rangle = \int \eta^*(x)\, \psi_t(x)\, dx ,$$

only changes in time in a simple way: its phase rotates with a frequency determined by the energy of η. When η has zero energy, like the infinite wavelength wave, it doesn't change at all.

The sum of the absolute square of ψ is also invariant, which is a statement of the conservation of probability. Explicitly in one dimension:

Which gives the norm:

which has preserved its value, as it must.
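For reference, the explicit formulas behind this statement can be written out as follows. This is a sketch that assumes the unnormalized initial condition exp(−x²/2a), natural units, and the rescaled time of this section (t/m replaced by t). The normalization convention is an assumption, not necessarily the one used in the original displayed equations.

```latex
\begin{aligned}
\psi_0(x) &= e^{-x^2/2a}, \\
\psi_t(x) &= \sqrt{\frac{a}{a+it}}\; e^{-x^2/2(a+it)}, \\
|\psi_t(x)|^2 &= \frac{a}{\sqrt{a^2+t^2}}\; e^{-a x^2/(a^2+t^2)}, \\
\int_{-\infty}^{\infty} |\psi_t(x)|^2 \, dx &= \frac{a}{\sqrt{a^2+t^2}}\,\sqrt{\frac{\pi (a^2+t^2)}{a}} = \sqrt{\pi a}.
\end{aligned}
```

The time dependence cancels between the prefactor and the Gaussian integral, so the norm keeps its t = 0 value.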

The width of the Gaussian is the interesting quantity, and it can be read off from the form of |ψ|²:

$$\sqrt{a^2 + t^2 \over a} .$$

The width eventually grows linearly in time, as t/√a. This is wave-packet spreading: no matter how narrow the initial wavefunction, a Schrödinger wave eventually fills all of space. The linear growth is a reflection of the momentum uncertainty: the wavepacket is confined to a narrow width √a and so has a momentum which is uncertain by the reciprocal amount 1/√a, a spread in velocity of 1/(m√a), and therefore in the future position by t/(m√a), where the factor of m has been restored by undoing the earlier rescaling of time.
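The spreading law can be checked numerically. The sketch below assumes NumPy, natural units, and the closed-form packet with initial condition exp(−x²/2a). The helper names `gaussian_packet`, `exact_width` and `measured_width` are illustrative only. The width is defined here so that |ψ|² is proportional to exp(−x²/width²), which makes it √2 times the standard deviation of the probability density.

```python
import numpy as np

def gaussian_packet(x, a, t):
    """Spreading Gaussian with initial condition exp(-x^2 / 2a), time rescaled by m."""
    s = a + 1j * t
    return np.sqrt(a / s) * np.exp(-x**2 / (2.0 * s))

def exact_width(a, t, m=1.0):
    """Width sqrt((a^2 + (t/m)^2) / a), with the factor of m restored."""
    return np.sqrt((a**2 + (t / m)**2) / a)

def measured_width(a, t, half_range=1000.0, n=400001):
    """Estimate the width from the second moment of |psi|^2 on a grid."""
    x = np.linspace(-half_range, half_range, n)
    density = np.abs(gaussian_packet(x, a, t))**2
    mean_x2 = np.sum(x**2 * density) / np.sum(density)
    return np.sqrt(2.0 * mean_x2)   # |psi|^2 ~ exp(-x^2 / width^2)

a = 0.25
early = measured_width(a, 0.0)    # close to sqrt(a)
late = measured_width(a, 50.0)    # close to t / sqrt(a) at large t
```

With a = 0.25 the initial width is √a = 0.5, and at t = 50 the width is within about one part in 10⁵ of the asymptotic value t/√a = 100.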

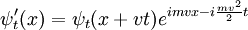

Galilean Invariance

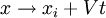

Galilean boosts are transformations which look at the system from the point of view of an observer moving with a steady velocity -v. A boost must change the physical properties of a wavepacket in the same way as in classical mechanics:

So that the phase factor of a free Schrödinger plane wave:

is only different in the boosted coordinates by a phase which depends on x and t, but not on p.

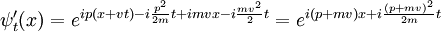

An arbitrary superposition of plane wave solutions with different values of p is the same superposition of boosted plane waves, up to an overall x,t dependent phase factor. So any solution to the free Schrödinger equation, ψt(x), can be boosted into other solutions:

Boosting a constant wavefunction produces a plane-wave. More generally, boosting a plane-wave:

produces a boosted wave:

Boosting the spreading Gaussian wavepacket:

produces the moving Gaussian:

Which spreads in the same way.
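As a worked check of the boost rule, one convention can be written out explicitly. It is a sketch in natural units (ħ = 1); the sign of v depends on whether the observer or the system is taken to move, so the signs below are an assumption. The second line verifies that a plane wave of momentum p becomes a plane wave of momentum p + mv with the correct free-particle frequency.

```latex
\begin{aligned}
\psi'_t(x) &= e^{\,i m v x - i m v^2 t/2}\; \psi_t(x - vt), \\
\psi_t(x) = e^{\,i p x - i p^2 t/2m}
\;&\Longrightarrow\;
\psi'_t(x) = e^{\,i (p + m v) x - i (p + m v)^2 t/2m}.
\end{aligned}
```

The exponent works out because p(x − vt) + mvx = (p + mv)x − pvt, and p²/2m + pv + mv²/2 = (p + mv)²/2m. The extra phase exp(imvx − imv²t/2) does not depend on p, which is why the same factor boosts any superposition of plane waves.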

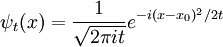

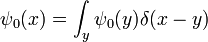

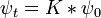

Free Propagator

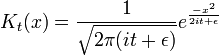

The narrow-width limit of the Gaussian wavepacket solution is the propagator K. For other differential equations, this is sometimes called the Green's function, but in quantum mechanics it is traditional to reserve the name Green's function for the time Fourier transform of K. When a is the infinitesimal quantity ε, the Gaussian initial condition, rescaled so that its integral is one:

becomes a delta function, so that its time evolution:

gives the propagator.
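Written out under the conventions assumed here (natural units, time rescaled to absorb m, and the Gaussian of the previous section normalized to unit integral), the limit reads as follows. The exact placement of ε is a convention and is only a sketch.

```latex
\begin{aligned}
\psi_0(x) &= \frac{1}{\sqrt{2\pi\epsilon}}\; e^{-x^2/2\epsilon}
  \;\longrightarrow\; \delta(x), \\
K_t(x) &= \frac{1}{\sqrt{2\pi(it+\epsilon)}}\; e^{-x^2/2(it+\epsilon)}
  \;\xrightarrow{\;\epsilon \to 0\;}\;
  \frac{1}{\sqrt{2\pi i t}}\; e^{\,i x^2/2t}.
\end{aligned}
```

This is the spreading Gaussian with a replaced by ε and an overall factor chosen so that the initial condition integrates to one.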

Note that a very narrow initial wavepacket instantly becomes infinitely wide, with a phase which is more rapidly oscillatory at large values of x. This might seem strange, since the solution goes from being concentrated at one point to being everywhere at all later times, but it is a reflection of the momentum uncertainty of a localized particle. Also note that the norm of the wavefunction is infinite, but this is also correct since the square of a delta function is divergent in the same way.

The factor of ε is an infinitesimal quantity which is there to make sure that integrals over K are well defined. In the limit that ε becomes zero, K becomes purely oscillatory and integrals of K are not absolutely convergent. In the remainder of this section, it will be set to zero, but in order for all the integrations over intermediate states to be well defined, the limit ε → 0 is only to be taken after the final state is calculated.

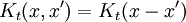

The propagator is the amplitude for reaching point x at time t, when starting at the origin, x=0. By translation invariance, the amplitude for reaching a point x when starting at point y is the same function, only translated:

In the limit when t is small, the propagator converges to a delta function:

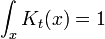

but only in the sense of distributions. The integral of this quantity multiplied by an arbitrary differentiable test function gives the value of the test function at zero. To see this, note that the integral over all space of K is equal to 1 at all times:

since this integral is the inner-product of K with the uniform wavefunction. But the phase factor in the exponent has a nonzero spatial derivative everywhere except at the origin, and so when the time is small there are fast phase cancellations at all but one point. This is rigorously true when the limit ε → 0 is taken after everything else.

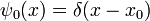

So the propagation kernel is the future time evolution of a delta function, and it is continuous in the sense that it converges to the initial delta function at small times. If the initial wavefunction is an infinitely narrow spike at position x0:

it becomes the oscillatory wave:

Since every function can be written as a sum of narrow spikes:

the time evolution of every function is determined by the propagation kernel:

And this is an alternate way to express the general solution. The interpretation of this expression is that the amplitude for a particle to be found at point x at time t is the amplitude that it started at x0 times the amplitude that it went from x0 to x, summed over all the possible starting points. In other words, it is a convolution of the kernel K with the initial condition.

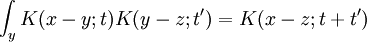

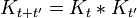

Since the amplitude to travel from x to z after a time t + t' can be considered in two steps, the propagator obeys the identity:

Which can be interpreted as follows: the amplitude to travel from x to z in time t+t' is the sum of the amplitude to travel from x to y in time t multiplied by the amplitude to travel from y to z in time t', summed over all possible intermediate states y. This is a property of an arbitrary quantum system, and by subdividing the time into many segments, it allows the time evolution to be expressed as a path integral.

Analytic Continuation to Diffusion

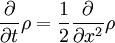

The spreading of wavepackets in quantum mechanics is directly related to the spreading of probability densities in diffusion. For a particle which is random walking, the probability density function at any point satisfies the diffusion equation:

where the factor of 2, which can be removed by rescaling either time or space, is only for convenience.

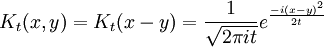

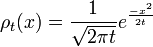

A solution of this equation is the spreading Gaussian:

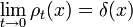

and since the integral of ρt is constant, while the width becomes narrow at small times, this function approaches a delta function at t=0:

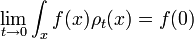

again, only in the sense of distributions, so that

for any smooth test function f.
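The distributional limit can be illustrated numerically. The sketch below assumes NumPy and takes the diffusion equation in the form ∂ρ/∂t = ½ ∂²ρ/∂x², so that the spreading Gaussian is exp(−x²/2t)/√(2πt); both forms are assumptions about the convention. The function names are illustrative. For the test function cos(x) the integral is known in closed form, exp(−t/2), which tends to cos(0) = 1 as t goes to zero.

```python
import numpy as np

def diffusion_kernel(x, t):
    """Spreading Gaussian rho_t(x), assuming d(rho)/dt = (1/2) d^2(rho)/dx^2."""
    return np.exp(-x**2 / (2.0 * t)) / np.sqrt(2.0 * np.pi * t)

def smeared_value(f, t, half_range=20.0, n=400001):
    """Approximate the integral of f(x) * rho_t(x) over the real line."""
    x = np.linspace(-half_range, half_range, n)
    dx = x[1] - x[0]
    return np.sum(f(x) * diffusion_kernel(x, t)) * dx

# The smeared value of cos approaches cos(0) = 1 as t shrinks.
values = [smeared_value(np.cos, t) for t in (1.0, 0.1, 0.001)]
```

The grid spacing must stay well below √t, so very small times need a finer grid than the default used here.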

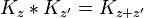

The spreading Gaussian is the propagation kernel for the diffusion equation and it obeys the convolution identity:

Which allows diffusion to be expressed as a path integral. The propagator is the exponential of an operator H:

which is the infinitesimal diffusion operator.

A matrix has two indices, which in continuous space makes it a function of x and x'. In this case, because of translation invariance, the matrix element K only depends on the difference of the positions, and a convenient abuse of notation is to refer to the operator, the matrix elements, and the function of the difference by the same name:

Translation invariance means that continuous matrix multiplication:

is really convolution:

The exponential can be defined over a range of t's which include complex values, so long as integrals over the propagation kernel stay convergent.

As long as the real part of z is positive, for large values of x, K is exponentially decreasing and integrals over K are absolutely convergent.

The limit of this expression for z coming close to the pure imaginary axis is the Schrödinger propagator:

and this gives a more conceptual explanation for the time evolution of Gaussians. The fundamental identity of exponentiation, or path integration:

holds for all complex z values where the integrals are absolutely convergent so that the operators are well defined.
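The composition identity for complex z can be tested by direct numerical integration. The sketch below assumes NumPy and the kernel K_z(x) = exp(−x²/2z)/√(2πz) with the principal branch of the square root; this form and the function names are assumptions. It checks that integrating K_z1(x − y) K_z2(y) over y reproduces K_(z1+z2)(x) when both real parts are positive.

```python
import numpy as np

def kernel(x, z):
    """K_z(x) = exp(-x^2 / 2z) / sqrt(2 pi z), for complex z with Re(z) > 0."""
    return np.exp(-x**2 / (2.0 * z)) / np.sqrt(2.0 * np.pi * z)

def compose(z1, z2, x, half_range=30.0, n=60001):
    """Integrate K_z1(x - y) * K_z2(y) over the intermediate point y."""
    y = np.linspace(-half_range, half_range, n)
    dy = y[1] - y[0]
    return np.sum(kernel(x - y, z1) * kernel(y, z2)) * dy

z1, z2, x0 = 0.5 + 1.0j, 0.3 + 0.7j, 0.7
two_steps = compose(z1, z2, x0)
one_step = kernel(x0, z1 + z2)
mismatch = abs(two_steps - one_step)
```

As the real parts of z1 and z2 shrink toward zero, the integrand decays more slowly and oscillates faster, so the integration range and resolution have to grow. This is the numerical face of the statement that the ε → 0 limit should be taken last.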

So that quantum evolution starting from a Gaussian, which is the diffusion kernel K:

gives the time evolved state:

This explains the diffusive form of the Gaussian solutions:

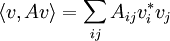

Variational Principle

The variational principle asserts that for any Hermitian matrix A, the lowest eigenvalue minimizes the quantity:

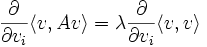

on the unit sphere < v,v > = 1. This follows by the method of Lagrange multipliers: at the minimum, the gradient of the function is parallel to the gradient of the constraint:

which is the eigenvalue condition

so that the extreme values of a quadratic form A are the eigenvalues of A, and the value of the function at the extreme values is just the corresponding eigenvalue:

When the Hermitian matrix is the Hamiltonian, the minimum value is the lowest energy level.
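The statement can be checked on a small random example. This is a sketch assuming NumPy; the matrix, the seed, and the helper name `rayleigh_quotient` are illustrative. It compares the quadratic form on many trial vectors with the lowest eigenvalue returned by `numpy.linalg.eigh`.

```python
import numpy as np

def rayleigh_quotient(A, v):
    """<v, A v> / <v, v> for a Hermitian matrix A and a nonzero vector v."""
    return np.real(np.vdot(v, A @ v) / np.vdot(v, v))

rng = np.random.default_rng(0)
M = rng.normal(size=(6, 6)) + 1j * rng.normal(size=(6, 6))
A = (M + M.conj().T) / 2.0                        # Hermitian by construction

eigenvalues, eigenvectors = np.linalg.eigh(A)     # eigenvalues in ascending order
lowest = eigenvalues[0]
ground = eigenvectors[:, 0]

# Random trial vectors never give a value below the lowest eigenvalue.
trials = rng.normal(size=(1000, 6)) + 1j * rng.normal(size=(1000, 6))
quotients = np.array([rayleigh_quotient(A, v) for v in trials])
```

Dividing by < v,v > is equivalent to restricting to the unit sphere, so the trial vectors do not need to be normalized first.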

In the space of all wavefunctions, the unit sphere is the space of all normalized wavefunctions ψ; the ground state minimizes

or, after an integration by parts,

All the stationary points come in complex conjugate pairs since the integrand is real. Since the stationary points are eigenvectors, any linear combination of a conjugate pair, which share the same eigenvalue, is a stationary point, and the real and imaginary parts are both stationary points.

The lowest energy state has a positive definite wavefunction, because given a ψ which minimizes the integral, its absolute value |ψ| is also a minimizer. But if ψ changed sign, |ψ| would have sharp corners at those places, and these sharp corners could be rounded out to reduce the gradient contribution, contradicting minimality.

Potential and Ground State

For a particle in a positive definite potential, the ground state wavefunction is real and positive, and has a dual interpretation as the probability density for a diffusion process. The analogy between diffusion and nonrelativistic quantum motion, originally discovered and exploited by Schrödinger, has led to many exact solutions.

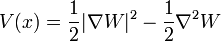

A positive definite wavefunction:

is a solution to the time-independent Schrödinger equation with m=1 and potential:

with zero total energy. W is, up to sign, the logarithm of the ground state wavefunction. The second derivative term is higher order in ħ, and ignoring it gives the semi-classical approximation.

The form of the ground state wavefunction is motivated by the observation that the ground state wavefunction is the Boltzmann probability for a different problem, the probability for finding a particle diffusing in space with the free-energy at different points given by W. If the diffusion obeys detailed balance and the diffusion constant is everywhere the same, the Fokker-Planck equation for this diffusion is the Schrödinger equation when the time parameter is allowed to be imaginary. This analytic continuation gives the eigenstates a dual interpretation: either as the energy levels of a quantum system, or the relaxation times for a stochastic equation.

Harmonic Oscillator

Main article: Quantum harmonic oscillator.

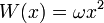

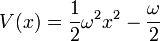

W should grow at infinity, so that the wavefunction has a finite integral. The simplest analytic form is:

with an arbitrary constant ω, which gives the potential:

This potential describes a harmonic oscillator, with the ground state wavefunction:

The total energy is zero, but the potential is shifted by a constant. The ground state energy of the usual unshifted harmonic oscillator potential:

is then the additive constant:

which is the zero point energy of the oscillator.
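The steps of this argument can be written out as a short worked derivation. It is a sketch that assumes the convention ψ = exp(−W), natural units with m = 1, and the relation V = ½W′² − ½W″, which follows from requiring −½ψ″ + Vψ = 0; the normalization of W is an assumption.

```latex
\begin{aligned}
W(x) &= \tfrac{1}{2}\,\omega x^2, \qquad \psi(x) = e^{-W(x)} = e^{-\omega x^2/2}, \\
V(x) &= \tfrac{1}{2}\,W'(x)^2 - \tfrac{1}{2}\,W''(x)
      = \tfrac{1}{2}\,\omega^2 x^2 - \tfrac{1}{2}\,\omega, \\
-\tfrac{1}{2}\,\psi'' + \tfrac{1}{2}\,\omega^2 x^2\,\psi &= \tfrac{1}{2}\,\omega\,\psi
\quad\Longrightarrow\quad E_0 = \tfrac{1}{2}\,\omega .
\end{aligned}
```

Moving the constant −ω/2 from the potential to the energy side is what turns the zero-energy solution into the ground state of the unshifted oscillator with energy ω/2.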

Coulomb Potential

Another simple but useful form is

where W is proportional to the radial coordinate. This is the ground state for two different potentials, depending on the dimension. In one dimension, the corresponding potential is singular at the origin, where it has some nonzero density:

and, up to some rescaling of variables, this is the lowest energy state for a delta function potential, with the bound state energy added on.

with the ground state energy:

and the ground state wavefunction:

In higher dimensions, the same form gives the potential:

which can be identified as the attractive Coulomb law, up to an additive constant which is the ground state energy. This is the superpotential that describes the lowest energy level of the Hydrogen atom, once the mass is restored by dimensional analysis:

where r0 is the Bohr radius, with energy

The ansatz

modifies the Coulomb potential to include a quadratic term proportional to 1/r², which is useful for nonzero angular momentum.
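The linear form of W can be worked through in the same way as the oscillator. The sketch below assumes ψ = exp(−W), natural units with m = 1, and V = ½|∇W|² − ½∇²W; the symbol κ for the proportionality constant is introduced here for illustration and is not the notation of the original equations.

```latex
\begin{aligned}
W(r) &= \kappa r, \qquad \psi = e^{-\kappa r}, \qquad
V = \tfrac{1}{2}\,|\nabla W|^2 - \tfrac{1}{2}\,\nabla^2 W, \\
d = 1:\quad V(x) &= \tfrac{1}{2}\,\kappa^2 - \kappa\,\delta(x), \\
d = 3:\quad V(r) &= \tfrac{1}{2}\,\kappa^2 - \frac{\kappa}{r}, \\
E_0 &= -\tfrac{1}{2}\,\kappa^2 \quad \text{in both cases, once the constant is moved to the energy.}
\end{aligned}
```

In one dimension the second derivative of κ|x| is 2κδ(x), which gives the delta function well. In three dimensions ∇²r = 2/r, which gives the attractive 1/r term. Identifying κ with 1/r0 in the three-dimensional case reproduces the hydrogen ground state energy −1/(2r0²) in these units.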

Operator Formalism

Bra-ket Notation

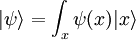

In the mathematical formulation of quantum mechanics, a physical system is fully described by a vector in a complex Hilbert space, the collection of all possible normalizable wavefunctions. The wavefunction is just an alternate name for the vector of complex amplitudes, and only in the case of a single particle in the position representation is it a wave in the usual sense, a wave in space time. For more complex systems, it is a wave in an enormous space of all possible worlds. Two nonzero vectors which are multiples of each other, two wavefunctions which are the same up to rescaling, represent the same physical state.

The wavefunction vector can be written in several ways:

- 1. As an abstract ket vector, |ψ⟩.

- 2. As a list of complex numbers, the components relative to a discrete list of normalizable basis vectors.

- 3. As a continuous superposition of non-normalizable basis vectors, like position states |x⟩.

The divide between the continuous basis and the discrete basis can be bridged by limiting arguments. The two can be formally unified by thinking of each as a measure on the real number line.

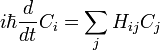

In the most abstract notation, the Schrödinger equation is written:

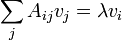

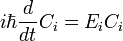

which only says that the wavefunction evolves linearly in time, and names the linear operator which gives the time derivative the Hamiltonian H. In terms of the discrete list of coefficients:

which just reaffirms that time evolution is linear, since the Hamiltonian acts by matrix multiplication.

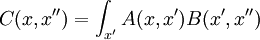

In a continuous representation, the Hamiltonian is a linear operator, which acts by the continuous version of matrix multiplication:

Taking the complex conjugate:

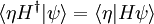

In order for the time-evolution to be unitary, to preserve the inner products, the time derivative of the inner product must be zero:

for an arbitrary state |ψ⟩, which requires that H is Hermitian. In a discrete representation this means that H_ij = (H_ji)*. When H is continuous, it should be self-adjoint, which adds some technical requirement that H does not mix up normalizable states with states which violate boundary conditions or which are grossly unnormalizable.

The formal solution of the equation is the matrix exponential (in natural units):

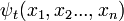

For every time-independent Hamiltonian operator, H, there exists a set of quantum states, |ψn⟩, known as energy eigenstates, and corresponding real numbers En satisfying the eigenvalue equation.

This is the time-independent Schrödinger equation.

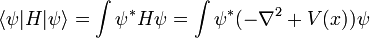

For the case of a single particle, the Hamiltonian is the following linear operator (in natural units):

which is a self-adjoint operator when V is not too singular and does not grow too fast. Self-adjoint operators have the property that their eigenvalues are real in any basis, and their eigenvectors form a complete set, either discrete or continuous.

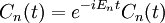

Expressed in a basis of eigenvectors of H, the Schrödinger equation becomes trivial:

Which means that each energy eigenstate is only multiplied by a complex phase:

This is what matrix exponentiation means: the time evolution acts to rotate the phases of the eigenfunctions of H.

When H is expressed as a matrix for wavefunctions in a discrete energy basis:

so that:

The physical properties of the C's are extracted by acting by operators, matrices. By redefining the basis so that it rotates with time, the matrices become time dependent, which is the Heisenberg picture.
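Time evolution in the energy basis can be sketched for a small discrete system. The example assumes NumPy and natural units (ħ = 1); the 3×3 Hamiltonian and the function name `evolve` are made up for illustration. The coefficients are expanded in eigenvectors of H, each component is multiplied by its phase exp(−iEt), and the result is transformed back.

```python
import numpy as np

def evolve(H, c0, t):
    """Evolve coefficients c0 under i dc/dt = H c, in natural units (hbar = 1)."""
    energies, vectors = np.linalg.eigh(H)         # H = V diag(E) V^dagger
    in_energy_basis = vectors.conj().T @ c0       # components along the eigenvectors
    phases = np.exp(-1j * energies * t)           # each eigenstate only phase-rotates
    return vectors @ (phases * in_energy_basis)   # back to the original basis

# An arbitrary Hermitian matrix standing in for a Hamiltonian.
H = np.array([[1.0, 0.5 - 0.2j, 0.0],
              [0.5 + 0.2j, 2.0, 0.3j],
              [0.0, -0.3j, 0.5]])
c0 = np.array([1.0, 0.0, 0.0], dtype=complex)
c1 = evolve(H, c0, 0.7)

norm_after = np.linalg.norm(c1)                   # stays equal to 1
in_two_steps = evolve(H, evolve(H, c0, 0.3), 0.4)  # same state as c1
```

Because H is Hermitian the energies are real, so every phase has unit modulus and the norm is preserved. Evolving for 0.3 and then 0.4 gives the same state as evolving for 0.7, the discrete version of the composition property of the propagator.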

Galilean Invariance

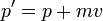

Galilean symmetry requires that H(p) is quadratic in p in both the classical and quantum Hamiltonian formalism. In order for Galilean boosts to produce a p-independent phase factor, px - Ht must have a very special form: translations in p need to be compensated by a shift in H. This is only true when H is quadratic.

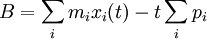

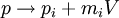

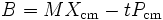

The infinitesimal generator of boosts in both the classical and quantum case is:

where the sum is over the different particles, and B,x,p are vectors.

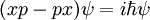

The Poisson bracket/commutator of B·v with x and p generates infinitesimal boosts, with v the infinitesimal boost velocity vector:

Iterating these relations is simple, since they add a constant amount at each step. By iterating, the dv's incrementally sum up to the finite quantity V:

B divided by the total mass is the current centre of mass position minus the time times the centre of mass velocity:

In other words, B/M is the current guess for the position that the centre of mass had at time zero.

The statement that B doesn't change with time is the centre of mass theorem. For a Galilean invariant system, the centre of mass moves with a constant velocity, and the total kinetic energy is the sum of the centre of mass kinetic energy and the kinetic energy measured relative to the centre of mass.

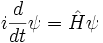

Since B is explicitly time dependent, H does not commute with B, rather:

This gives the transformation law for H under infinitesimal boosts:

The interpretation of this formula is that the change in H under an infinitesimal boost is entirely given by the change of the centre of mass kinetic energy, which is the dot product of the total momentum with the infinitesimal boost velocity.

The two quantities (H,P) form a representation of the Galilean group with central charge M, where only H and P are classical functions on phase-space or quantum mechanical operators, while M is a parameter. The transformation law for infinitesimal v:

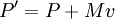

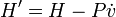

can be iterated as before: P goes from P to P+MV in infinitesimal increments of v, while H changes at each step by an amount proportional to P, which changes linearly. The final value of H is then changed by the value of P halfway between the starting value and the ending value:

The factors proportional to the central charge M are the extra wavefunction phases.
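The iteration described above can be summarized in one line per quantity. This is a sketch under the sign convention in which a boost by V adds MV to the total momentum; the opposite convention flips the sign of the term linear in V.

```latex
\begin{aligned}
P &\;\to\; P + M V, \\
H &\;\to\; H + \left(P + \tfrac{1}{2} M V\right)\cdot V
   \;=\; H + P\cdot V + \tfrac{1}{2}\, M V^2 .
\end{aligned}
```

For a kinetic term P²/2M this is the expansion (P + MV)²/2M = P²/2M + P·V + ½MV², so the change in H is exactly the change in the centre of mass kinetic energy.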

Boosts give too much information in the single-particle case, since Galilean symmetry completely determines the motion of a single particle. Given a multi-particle time dependent solution:

with a potential that depends only on the relative positions of the particles, it can be used to generate the boosted solution:

For the standing wave problem, the motion of the centre of mass just adds an overall phase. When solving for the energy levels of multiparticle systems, Galilean invariance allows the centre of mass motion to be ignored.

![[x,p] = xp - px = i \hbar](../../images/756/75611.png)

![{d\over dt} \langle \eta | A |\psi \rangle = - \eta \hat H A \psi + \eta AH \psi = [H,A]](../../images/756/75614.png)

![[B\cdot v ,x_i] = vt](../../images/757/75726.png)

![[B\cdot v ,p_i] = v m_i](../../images/757/75727.png)

![{dB\over dt} = [H,B] + {\partial B \over \partial t} = 0](../../images/757/75731.png)

![[B\cdot v,H] = - P_\mathrm{cm} v](../../images/757/75732.png)